Prometheus is itself a metric collection and storage system. However, Prometheus data endpoints (that is, endpoints that emit Prometheus metric data) follow a specific format for the emitted metric data. The Hawkular WildFly Agent can parse this format and push the metric data into Hawkular for storage, where Hawkular and its clients can then use it (for graphing the metric data, alerting on the metric data, etc.).

I will explain how you can quickly get Hawkular to collect metric data from any Prometheus endpoint and store that data in Hawkular.

First, you need a Prometheus endpoint that emits metric data! For this simple demo, I ran the Prometheus Server, which itself emits metrics. I did this via docker:

1. Make sure your docker daemon is running: sudo docker daemon

2. Run the Prometheus Server docker image: sudo docker run -p 9090:9090 prom/prometheus

That's it! You now have a Prometheus Server running and listening on port 9090. To see the metrics that it emits itself, go to http://localhost:9090/metrics. We'll be asking the Hawkular WildFly Agent to collect these metrics and push them into a Hawkular Server.

Second, you need to run a Hawkular Server. I won't go into details here on how to do that. Suffice it to say, either build or download a Hawkular Server distribution and run it (if it is not a developer build, make sure you run your external Cassandra Server before starting your Hawkular Server - e.g. sudo docker run -p 9042:9042 cassandra).

Now you want to run a Hawkular WildFly Agent to collect some of that Prometheus metric data and store it in the Hawkular Server. In this demo, I'll be running the Swarm Agent, which is simply a Hawkular WildFly Agent packaged in a single jar that you can run as a standalone app. However, its agent subsystem configuration is the same as if you were running the agent in a full WildFly Server so the configuration I'll be describing can be used no matter how you have deployed your agent.

Rather than rely on the default configuration file that comes with the Swarm Agent, I extracted it and edited it as I describe below.

I deleted all the "-dmr" related configuration settings (metric-dmr, resource-type-dmr, remote-dmr, etc.). I want my agent to collect data only from my Prometheus endpoint, so there is no need to define all of this DMR metadata. (NOTE: the Swarm Agent configuration file does already support Prometheus out of the box. I will skip that for this demo - I want to explicitly explain the parts of the agent configuration that need to be in the configuration file.)

The Prometheus portion of the agent configuration is very small. Here it is:

    <managed-servers>
      <remote-prometheus name="My Prometheus Endpoint"
                         enabled="true"
                         interval="30"
                         time-units="seconds"
                         metric-tags="feed=%FeedId"
                         metric-id-template="%FeedId_%MetricName"
                         url="http://127.0.0.1:9090/metrics"/>
    </managed-servers>

That's it. <remote-prometheus> tells the agent where the Prometheus endpoint is and how often to pull the metric data from it. Every metric emitted by that Prometheus endpoint will be collected and stored in Hawkular.

Notice I can associate my managed server with metric tags (remote-prometheus is one type of "managed server"). Those tags will be added, in the Hawkular Server (specifically in the Hawkular Metrics component), to every metric that is collected for this remote-prometheus managed server. All metrics will have these same tags. In addition, any labels associated with the emitted Prometheus metrics (Prometheus metric data can have name/value pairs associated with them - Prometheus calls these labels) will be added as Hawkular tags. Similarly, the ID used to store the metrics in the Hawkular Metrics component can also be customized. Both metric-tags and metric-id-template are optional. You can also place those attributes on individual metric definitions (which I describe below), which is most useful if you have tags you want to add only to metrics of a specific metric type rather than to all of the metrics collected for your managed server.
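Since both metric-tags and metric-id-template are optional, a stripped-down endpoint definition might look like the sketch below. The name "Minimal Endpoint" is made up for illustration, and the sketch assumes the agent falls back to its own default metric IDs and adds no extra managed-server tags when the two attributes are left out.

```xml
<managed-servers>
  <!-- Same endpoint as in the demo, minus the optional metric-tags and metric-id-template -->
  <!-- "Minimal Endpoint" is a hypothetical name used only for this sketch -->
  <remote-prometheus name="Minimal Endpoint"
                     enabled="true"
                     interval="30"
                     time-units="seconds"
                     url="http://127.0.0.1:9090/metrics"/>
</managed-servers>
```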

If you wish to have the agent only collect a subset of the metrics emitted by that endpoint, then you must tell the agent which metrics you want collected. You do this via metric sets:

    <metric-set-prometheus name="My Prometheus Metrics">
      <metric-prometheus name="http_requests_total" />
      <metric-prometheus name="go_memstats_heap_alloc_bytes" />
      <metric-prometheus name="process_resident_memory_bytes" />
    </metric-set-prometheus>

Once you have defined your metric-set-prometheus entries, you specify them in the metric-sets attribute on your <remote-prometheus> element (e.g. metric-sets="My Prometheus Metrics").
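Putting the two fragments together, the metric set and the managed server could be wired up roughly as follows. Treat this as a sketch under two assumptions: that the metric set sits next to <managed-servers> inside the agent subsystem configuration, and that the tag kind=http (invented here purely for illustration) is something you want on one metric only.

```xml
<!-- Assumed placement: a sibling of <managed-servers> inside the agent subsystem -->
<metric-set-prometheus name="My Prometheus Metrics">
  <!-- metric-tags on a single metric definition; "kind=http" is an illustrative tag -->
  <metric-prometheus name="http_requests_total" metric-tags="kind=http"/>
  <metric-prometheus name="go_memstats_heap_alloc_bytes"/>
  <metric-prometheus name="process_resident_memory_bytes"/>
</metric-set-prometheus>

<managed-servers>
  <!-- metric-sets restricts collection to the metrics named in the set above -->
  <remote-prometheus name="My Prometheus Endpoint"
                     enabled="true"
                     interval="30"
                     time-units="seconds"
                     metric-tags="feed=%FeedId"
                     metric-id-template="%FeedId_%MetricName"
                     metric-sets="My Prometheus Metrics"
                     url="http://127.0.0.1:9090/metrics"/>
</managed-servers>
```

With a configuration along these lines, only the three named metrics would be stored. Each would carry the feed tag from the managed server, and http_requests_total would additionally carry the per-metric tag.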

OK, now that I've got my Swarm Agent configuration in place (call it "agent.xml" for simplicity), I can run the agent and point it to my configuration file:

    java -jar hawkular-swarm-agent-dist-*-swarm.jar agent.xml

At this point I have my agent running alongside my Hawkular Server and the Prometheus Server, which is the source of our metric data. The agent is collecting information from the Prometheus endpoint and pushing the collected data to the Hawkular Server.

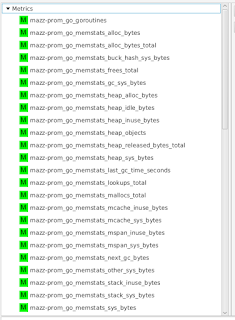

To visualize this collected data, I'm using the experimental developer tool HawkFX. This is simply a browser that lets you see what data is in Hawkular Inventory as well as Hawkular Metrics. When I log in, I can see all the metric data that comes directly from the Prometheus endpoint.

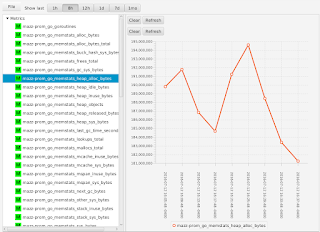

I can select a metric to see its graph:

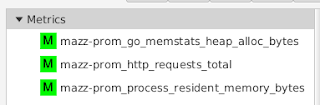

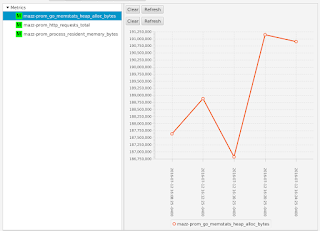

If I had configured my agent to collect only a subset of metrics (as I showed earlier), I would see only the metrics that I asked it to collect - all the other metrics emitted by the Prometheus endpoint would be ignored.

What this all shows is that you can use Hawkular WildFly Agent to collect metric data from a Prometheus endpoint and store that data inside Hawkular.
